As far as productivity boosts go, ChatGPT (which calls itself Assistant) represents the biggest leap in a long time, especially if our work involves writing. Correctly used, that is. Incorrectly used, ChatGPT can produce pure garbage, although it might look very similar to the valuable help we could have gotten if we had wielded this new tool correctly.

Whether it boosts our productivity by quickly helping us fill in the blanks, or wastes our time with garbage we need to identify and clean up, it will do so using the same engine. ChatGPT is the latest famous, and infamous, installment of OpenAI’s aligned large language models. At its core, the model uses its training data to predict the next word, much like the suggestions phone keyboards offer us when we are typing.

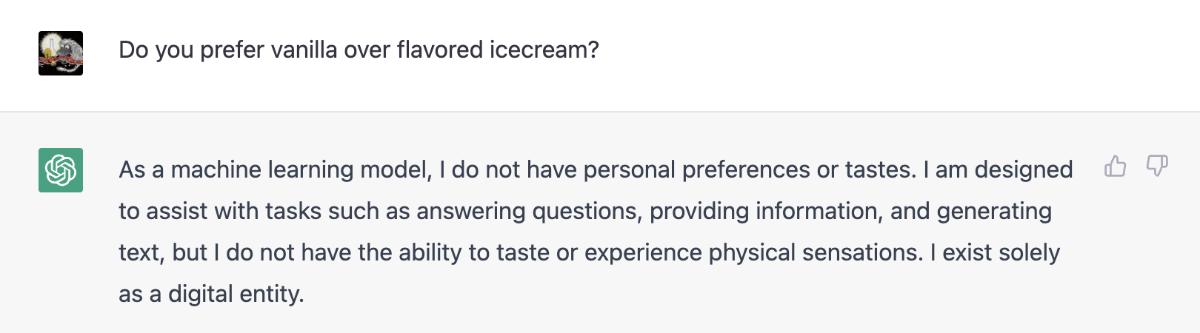
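
To make the keyboard comparison concrete, here is a toy next-word predictor. It is only a sketch of the general idea: it counts which word follows which in a tiny made-up corpus, which is far simpler than what a large language model actually does. The names `train_bigrams` and `suggest_next`, and the corpus itself, are invented for this illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    followers = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def suggest_next(followers, word, k=3):
    """Return up to k of the most frequent followers, like keyboard suggestions."""
    counts = followers.get(word.lower(), Counter())
    return [candidate for candidate, _ in counts.most_common(k)]


# Made-up training text, standing in for "everything the model has read".
corpus = "i am on my way . i am running late . i am on the train . see you soon"
model = train_bigrams(corpus)

print(suggest_next(model, "am"))  # ['on', 'running']
print(suggest_next(model, "i"))   # ['am']
```

The predictor has no idea whether I really am on the train. It only knows which words tended to follow each other in what it was trained on.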

The line between correct and incorrect use is fine, and even after having used ChatGPT quite a lot for a week, I am not here to tell you where the line goes. However, I do want to highlight the fact that ChatGPT is eager to try to tell us what it is intended for. I say “try” because often we don’t want to listen. We think the message is boring, and the internet is full of tips on how to fool ChatGPT into not bringing up its self-declaration.

In the message we are told something like:

/…/ I am designed to assist with tasks such as answering questions, providing information, and generating text. /…/

The main clue here is the “to assist”. ChatGPT calls itself Assistant for a reason. As in any relationship we may have with an assistant, we are the ones with a job to get done, and our assistant is there to help us with it. It’s not supposed to do the job for us. I think that if we use ChatGPT to get the job done, we are using it wrong.

Further, it is here to assist with “answering questions, providing information and generating text”. It is probably wrong to read this as a promise to answer questions correctly or give accurate information, or to take “generating text” to mean text that is factually true. ChatGPT is trained on data from our reality. A lot of data, far more than most earlier language models. Coming from our reality, the data is bound to contain a mix of factual information and half-truths, along with falsehoods and blatant lies.

Even if all input data were pure truth, since the AI draws “inspiration” for its text from the whole body of training data, the generated text may end up far removed from the truths contained there.
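
A toy illustration of how true input can still produce untrue output. This is a sketch that assumes a word-to-next-word model much cruder than ChatGPT; the two training sentences and the function names are made up for the example. Both training sentences are true, yet half of what the model can generate from them is false.

```python
from collections import defaultdict

# Training data: nothing but true statements.
true_sentences = [
    "paris is the capital of france",
    "rome is the capital of italy",
]


def build_transitions(sentences):
    """Record which words were ever seen directly after each word."""
    transitions = defaultdict(set)
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for current, nxt in zip(words, words[1:]):
            transitions[current].add(nxt)
    return transitions


def all_generations(transitions, word="<s>", prefix=()):
    """Enumerate every sentence the model could produce.

    Fine for this tiny loop-free example; a corpus with repeated
    word cycles would need a length limit.
    """
    if word == "</s>":
        yield " ".join(prefix)
        return
    for nxt in sorted(transitions[word]):
        new_prefix = prefix if nxt == "</s>" else prefix + (nxt,)
        yield from all_generations(transitions, nxt, new_prefix)


generated = list(all_generations(build_transitions(true_sentences)))
invented = [s for s in generated if s not in true_sentences]

print(len(generated))  # 4
print(invented)
# ['paris is the capital of italy', 'rome is the capital of france']
```

Every single word-to-word step in the invented sentences was seen in true text. The falsehood only appears in the combination.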

ChatGPT is very good at producing text. We can use it to assist us with that. It is then our job to use that input to get the text we need to get our job done. We don’t need to settle for the first drafts the assistant hands us, though. A key part of what makes ChatGPT stand out from so many of the chat bots most of us have been using so far is its ability to include the ongoing conversation in the context from which it generates text. To me this is what makes it so amazing to “talk” to ChatGPT. It is also what makes it so useful. Like with a human assistant, I can provide feedback on the help I am getting, and ChatGPT will refine its output in the direction my feedback points it.

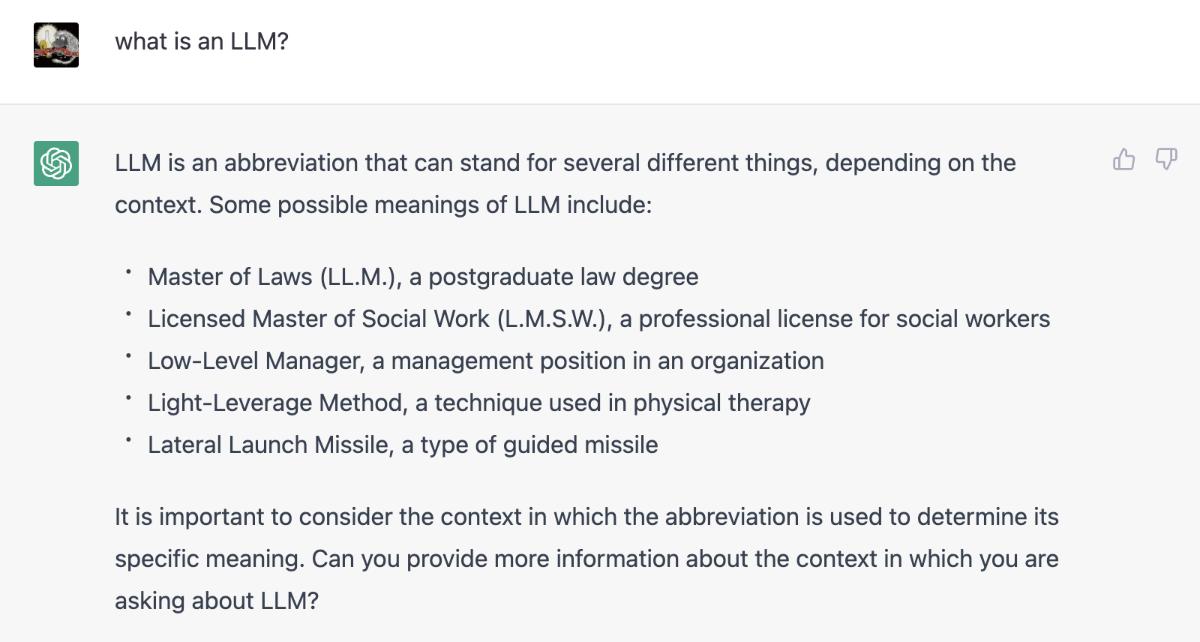

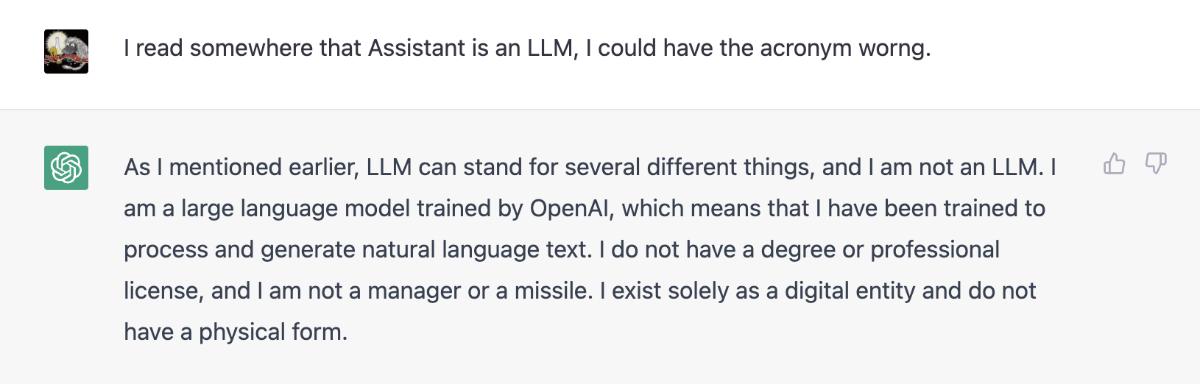

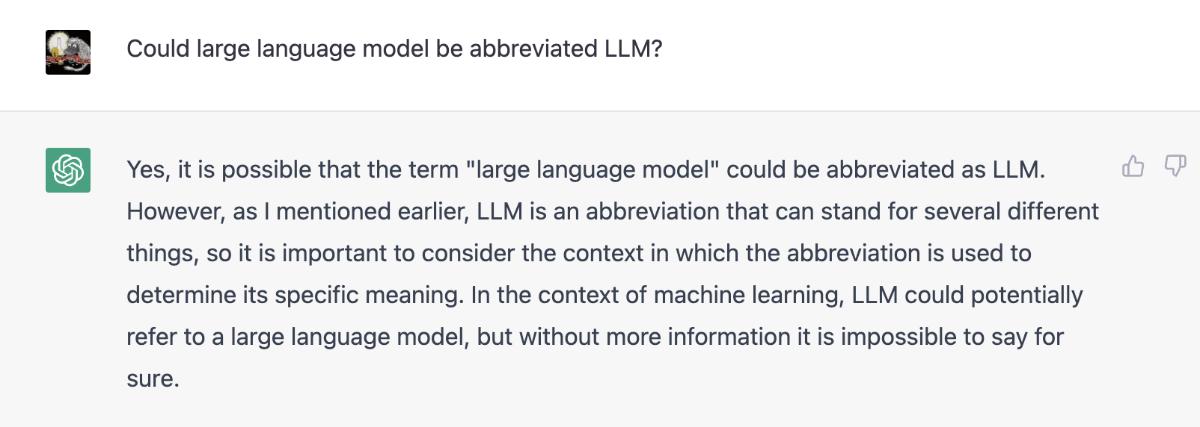

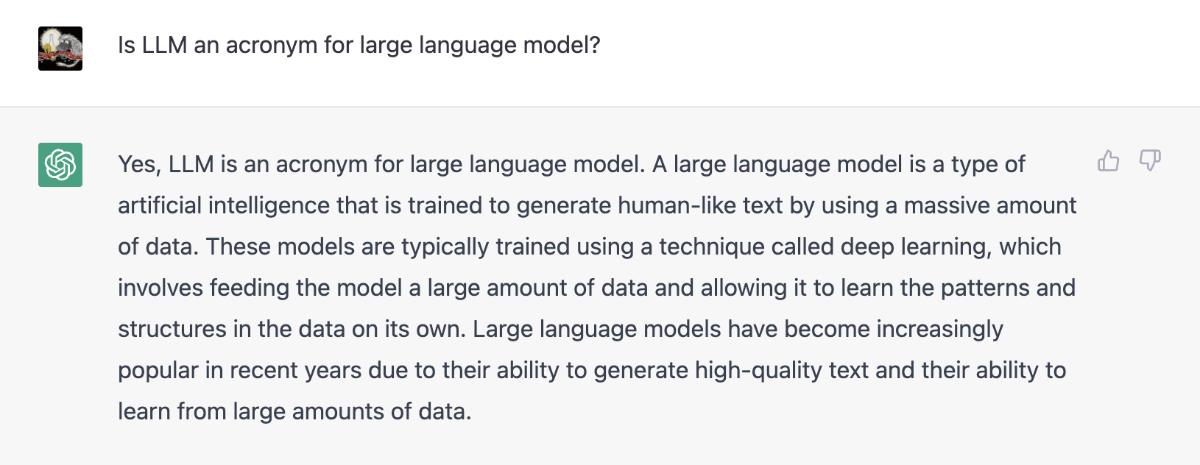

In the above screenshot we can see that it even asks for feedback that can guide it to provide better assistance. However, this unique quality of ChatGPT, including what has previously been said in its context, also covers what it has said itself, and that can “lock” the bot and prevent it from producing correct text.

What I think is going on here is that since it has previously given a list of what LLM can mean, it then can’t “see” that LLM is also an acronym for Large Language Model, which makes the above text look ridiculous. Once it has locked up like this, it will continue to insist:

To help ChatGPT get unstuck, we can use the Reset Thread function (or reload the page).

This will have the effect of making the bot “forget” what we have been talking about and it can start making sense again.
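
Here is a rough sketch of why a reset helps, under the assumption that a chat session simply resends the whole conversation so far, including the bot's own replies, as context for each new answer. I don't know how ChatGPT implements this internally. The `Conversation` class and the stand-in `echo_context_size` "model" are hypothetical and only illustrate the bookkeeping.

```python
class Conversation:
    """Toy chat session: every turn is added to the context the model sees."""

    def __init__(self, generate):
        # `generate` is a hypothetical function: prompt text in, reply text out.
        self.generate = generate
        self.history = []

    def ask(self, user_message):
        self.history.append(("User", user_message))
        # The prompt contains everything said so far, by both parties.
        prompt = "\n".join(f"{who}: {text}" for who, text in self.history)
        reply = self.generate(prompt)
        # The bot's own reply becomes part of the context for later turns.
        self.history.append(("Assistant", reply))
        return reply

    def reset(self):
        """Forget the conversation, like resetting the thread."""
        self.history.clear()


def echo_context_size(prompt):
    """Stand-in for a real model: reports how much context it was handed."""
    return f"I can see {len(prompt.splitlines())} line(s) of conversation."


chat = Conversation(echo_context_size)
print(chat.ask("What does LLM stand for?"))  # I can see 1 line(s) of conversation.
print(chat.ask("Are you sure?"))             # I can see 3 line(s) of conversation.
chat.reset()
print(chat.ask("What does LLM stand for?"))  # I can see 1 line(s) of conversation.
```

In this picture, an earlier wrong answer keeps getting fed back in as context until we clear the history.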

If we use some of the various tricks to get past ChatGPT’s main function as an assistant, and combine them with this tendency to lock up on some aspects of the conversation and start saying untrue things to keep some sort of consistency, it gets easy to show how bad and dangerous this new technology is.

One such trick is to start by instructing it to pretend that some context is true and to produce texts within this “fantasy”. This could very well be part of a job that someone wants to get done, so it makes sense for Assistant to help with it.

I wrote my thesis about multiferroics and I was curious if ChatGPT could serve as a tool for scientific writing. So I asked to provide me a shortlist of citations relating to the topic. ChatGPT refused to openly give me citation suggestions, so I had to use a “pretend” trick. pic.twitter.com/xzsSqU59EY

— Teresa Kubacka (@paniterka_ch) December 5, 2022

And then we can continue to ask the bot about things in this “fantasy”, and it will lock up on some aspects of it, drop the anchoring in truth for other aspects, and start to produce plausible-sounding, albeit generic, paragraphs of what amounts to garbage. All in perfect grammar, and sometimes even in quite beautifully idiomatic English (or whatever language we speak with it).

We will end up with something we can use to scare ourselves and others.

I cannot forget a quote I read once: that democracy relies on the society to have a shared basis of facts. If we cannot agree on the facts, how are we to make decisions and policies together? It is already difficult now to do so. And the worst misinformation is yet to come.

— Teresa Kubacka (@paniterka_ch) December 5, 2022

You can of course trick ChatGPT like that for comical effect. I have certainly had a lot of fun with this. But don’t let all the content produced like this hide the fact that you can also get a lot of productivity-boosting use out of it, if you are holding it right.

You are misusing the tool. ChatGPT is a generative model, not a lookup database. It does great if you ask it to summarize or synthesize. With an internet connection it would fix the problem largely, allowing it to fact check itself.

— Aki Ranin (@aki_ranin) December 9, 2022

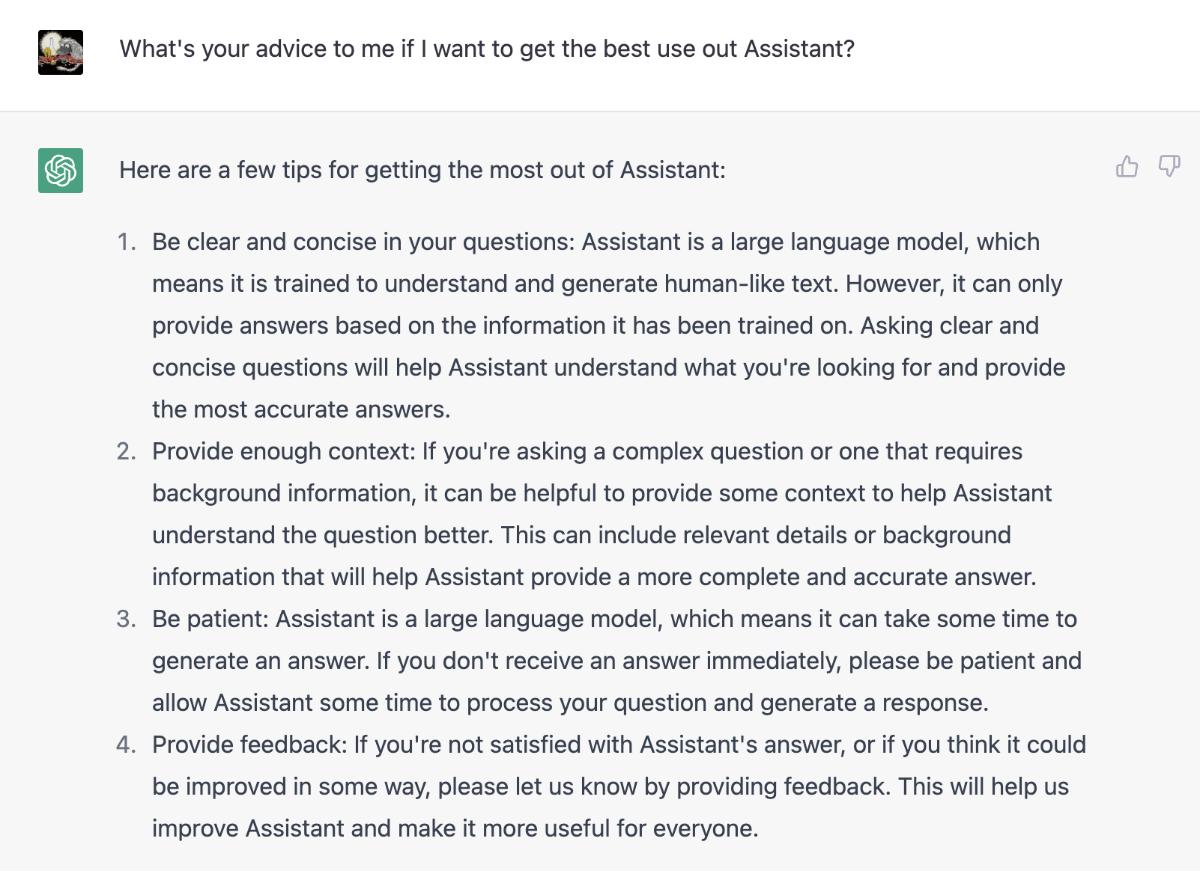

Why not ask ChatGPT for assistance on how to best use it?

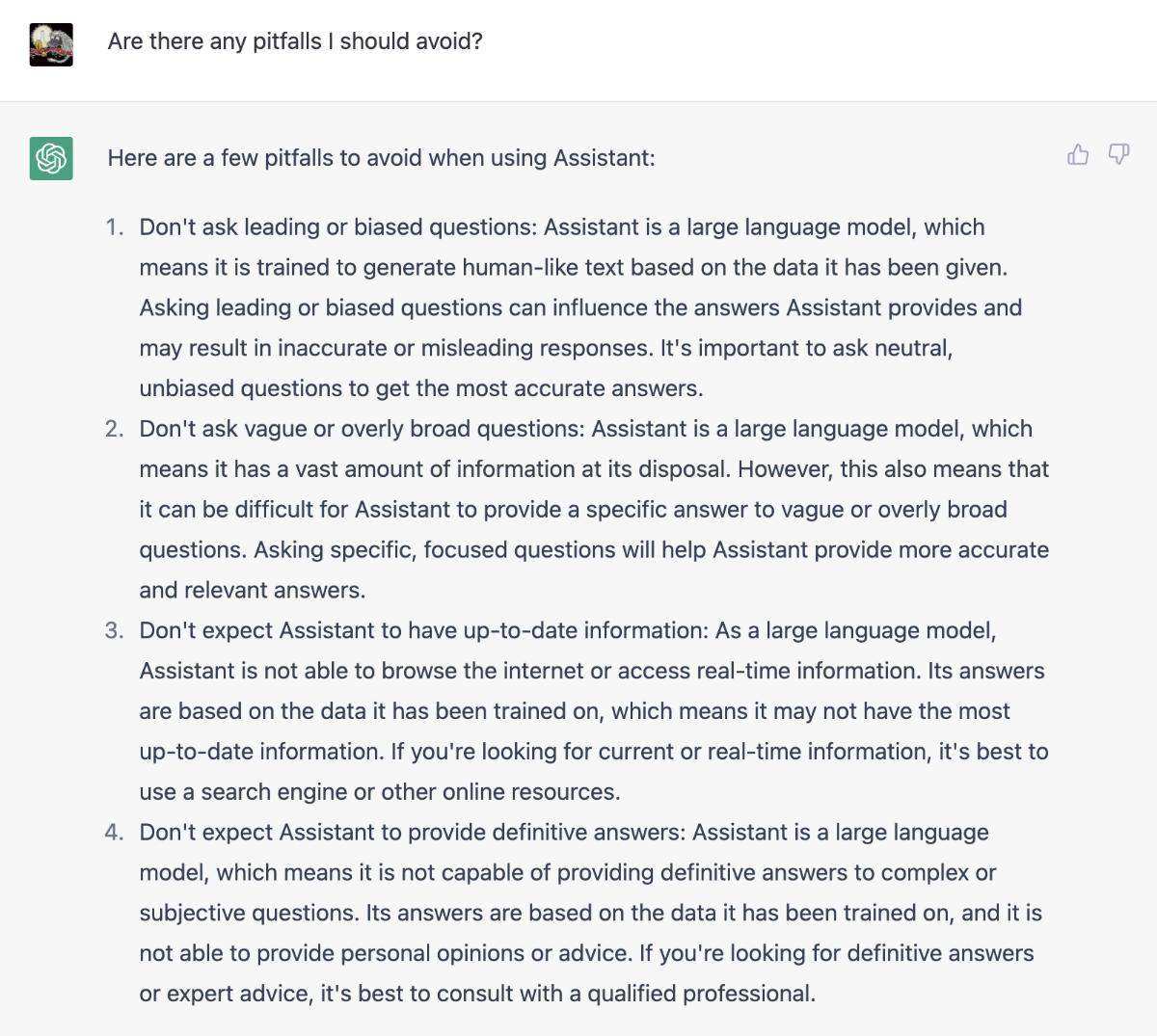

You’ll get a fuller picture if you also know how it is not supposed to be used:

Like with any tool, even if it can be used for many things, you should know what it is supposed to do. It can also help to know a bit about the trade-offs made when designing the tool. With ChatGPT, you get far by reading the announcement blog post by its creators.