Here’s how I created a fully automated, weekly newsletter using only RSS, Buttondown, and Google Sheets. Full source code included!

I run a hobby project (Cospaia, mostly in Swedish, but it’s not important for this article) together with some friends. We wanted a weekly automated newsletter. When figuring out how to do this, I compared it to a commercial project I’m involved with, where we use Netlify Forms and Mailchimp, with Zapier as glue. As Mailchimp is pretty crappy, and has a history of biting me really hard (deleting my subscriber database), and we are not hosting the Cospaia site using Netlify, I looked around for other ways to do this one. In the Cospaia project, instead of Netlify we are using Vercel, so Vercel Functions came to mind. I didn’t immediately find something however, like I did with Netlify Forms. This doesn’t mean there isn’t an easy path here, just that Brave Search found something else for me first. It was actually also involving Netlify Forms, but instead of Mailchimp there was something else. It looked super interesting and relevant to what I needed: Buttondown! But before digging into the solution, let’s have a look at my requirements.

We want to send a weekly newsletter, automatically summarizing the two main sections of our site.

The two sections are Articles and Blog.

They both have an RSS feed each.

I want full flexibility for how the summaries are constructed.

Our audience needs a way to sign up for the newsletter.

Both the emails and the signup form need to be clean and simple. Beyond that, not much design fancy required.

It’s not important that the emails are sent using our own domain, nor that the signup is fully hosted on our site.

It’s a hobby project and my budget is very tight.

Simple enough, huh?

It quickly became clear to me that Buttondown ticked all boxes. (N.B. It also supports custom domains and other things I didn’t have as requirements.) It is so slick and easy to get started with, that I very soon (we’re talking less than 20 minutes) was sending myself a test newsletter that looked pretty and had a nice signup form. All using some little Markdown and a really minimal amount of CSS (I wanted to style the images a bit differently than the otherwise excellent default styling). I’m contrasting this to Mailchimp with its clunky editor that I have to fight with to do the simplest things. With Mailchimp's design editor I find it is almost impossible to make things look any good at all. Despite (or perhaps because of) the many templates and themes available there.

Now to the RSS summaries. I had seen somewhere that Buttondown has automation for this. I imagine it is similar to (but probably way, way better than) Mailchimp’s. However, the automation is outside the budget. I started to look at the Buttondown API instead. It’s available on the first paid tier, which is within my tiny budget. However, I didn’t fancy the idea of having to maintain some server for running this. What to do? Cospaia is a static site and published from a Google Sheet (that starts a GitLab CI pipeline responsible for building the site). I decided to use Google Apps Script in this publishing sheet, even if it would mean we have to send the newsletter out manually. Note that the requirements didn’t mention sending automatic weekly newsletters. 😀 That said, plot twist about this trade-off ahead.

“Yada, yada, yada, where’s the code you promised, Peter?” you have been saying for a while now, right? No worries, I’m not going to disappoint. There will now be code. One part of the task here is to grab those RSS feeds, so let’s start there.

function emailFetchFeeds(arcticleRssUrl, blogRssUrl) {

const articlesRss = UrlFetchApp.fetch(arcticleRssUrl).getContentText();

// const articlesRss = SAMPLE_ARTICLES_RSS;

const blogRss = UrlFetchApp.fetch(blogRssUrl).getContentText();

// const blogRss = SAMPLE_BLOG_RSS;

return [articlesRss, blogRss];

}

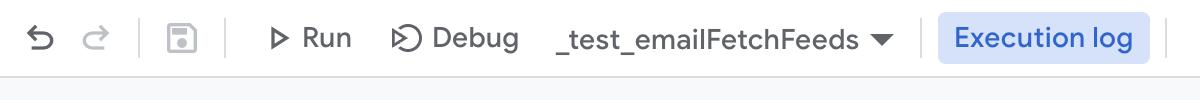

function _test_emailFetchFeeds() {

console.log(emailFetchFeeds(configGet('email_article_rss_url'), configGet('email_blog_rss_url'))

.map(feeds => feeds.substring(0, 1000)));

}Two things to note here. First, the

_test_emailFetchFeeds function is not an automated unit

test thing (I haven’t figured out how to do that yet). It’s how I

manually test that my Apps Scripts code units do what I want them to do.

I create zero-argument functions that I run in the Scripts editor (or

from the command line using clasp).

I’ll not post any more of the _test_ functions in this

article. But I want to recommend the practice!

Second, I have most of the configuration in one of the spreadsheets, using named ranges.

Here’s the lookup code (yes, the publication/newsletter script project is called BabyDriver. Named after my first son’s favorite movie).

function configGet(key) {

const values = SpreadsheetApp.getActiveSpreadsheet().getRangeByName(`BabyDriver Config!${key}`).getValues()[0];

return values.length > 1 ? values.filter(v => v.match(/.+/)) : values[0];

}It supports both single-value ranges, and arrays.

Btw, all code in this article is licensed under the Be Kind License, free to use, at your own risk, for whatever, as long as you are a kind person.

The way I want to summarize the RSS feeds in the newsletter email is

that for each section (Articles and Blog, remember?),

present the fresh post with full “cards” (title, publication date,

author, image, and description). Then I want to have a few of the posts,

published immediately closest to these fresh items, as a

bulleted list, without image and description. See here

for an example of the result. The following function takes care of

parsing the XML, and based on a now timestamp, sorting out

the fresh and the rest for a section and

returning that as a map (object):

function emailGetLatestItems({ xml, now, freshDays, restCount }) {

const parsedXml = XmlService.parse(xml);

const allItems = Array.from(parsedXml.getRootElement().getChild("channel").getChildren("item"));

const freshTimestamp = now - (freshDays * 24 * 60 * 60 * 1000);

const freshItems = allItems

.map(parseItem)

.filter(item => {

return item.pubDate >= freshTimestamp;

});

const restItems = allItems.slice(freshItems.length, freshItems.length + restCount).map(parseItem);

return {

freshItems,

restItems

};

}What’s returned is actually a parsed post item with the information

we need for converting this to markdown later on. This is the function

mapped over the fresh and rest items:

function parseItem(item) {

const title = item.getChildText("title");

const link = item.getChildText("link");

const description = item.getChildText("description");

const pubDateStr = item.getChildText("pubDate");

const pubDate = new Date(pubDateStr).getTime();

const mediaNamespace = XmlService.getNamespace("http://search.yahoo.com/mrss/");

const mediaContent = item.getChild("content", mediaNamespace);

const imageUrl = mediaContent ? mediaContent.getAttribute("url").getValue() : null;

const authorElement = item.getChild("author");

const authorLink = authorElement ? authorElement.getChild("a").getAttribute("href").getValue() : null;

const authorName = authorElement ? authorElement.getChild("a").getText() : null;

return { title, link, description, pubDate, imageUrl, authorLink, authorName };

}I know I promised not to include more _test_ functions,

but this logic is central to the task at hand, and a reason for rolling

my own RSS automation even if I could afford the Buttondown

automation tier (which I can’t for this project, but anyway). So here

goes:

function _test_emailGetLatestItems() {

console.log('articles:', emailGetLatestItems({

xml: SAMPLE_ARTICLES_RSS,

now: new Date().getTime(),

freshDays: 7,

restCount: 5

}))

console.log('blog:', emailGetLatestItems({

xml: SAMPLE_BLOG_RSS,

now: new Date().getTime(),

freshDays: 7,

restCount: 5

}));

}In the configuration used for the real newsletter, I use 15

freshDays, in case you checked the example I linked above,

and thought you had spotted a bug. 😀 Here’s the code that takes it from

a now timestamp, along with two RSS texts to add to the

curated items that we include in the email:

function emailItemsFromRss(now, articlesRss, blogRss) {

const articlesItems = emailGetLatestItems({

xml: articlesRss,

now,

freshDays: configGet('email_latest_articles_days'),

restCount: configGet('email_earlier_articles_count')

});

const blogItems = emailGetLatestItems({

xml: blogRss,

now,

freshDays: configGet('email_latest_blog_days'),

restCount: configGet('email_earlier_blog_count')

});

return [articlesItems, blogItems];

}Since I’ve promised full source code, here’s a utility function used in some places, for formatting publication dates.

function emailFormatPubDate(timestamp) {

const date = new Date(timestamp);

return `${date.getFullYear()}-${String(date.getMonth() + 1).padStart(2, '0')}-${String(date.getDate()).padStart(2, '0')}`;

}(It’s an ISO-date, easily parsed by Swedish humans.)

Buttondown emails are authored in sweet Markdown. I didn’t have time to make up some template language for this, but JavaScript template strings are pretty nice. Thanks to whoever invented those! 🙏

function emailFormatSectionAsMarkdown(items, freshHeader, restHeader) {

const freshItemsMarkdown = items.freshItems.map((item) => {

const title = `### [${item.title}](${item.link})`;

const author = `${item.authorName}, _${emailFormatPubDate(item.pubDate)}_`;

const image = `<div><img src="${item.imageUrl}"></div>`;

return `${title}\n\n${author}\n\n${image}\n\n${item.description}`;

}).join("\n\n");

const freshMarkdown = items.freshItems.length > 0 ? `## ${freshHeader}\n\n${freshItemsMarkdown}\n\n` : '';

const restItemsMarkdown = items.restItems.map((item) => {

return `* [${item.title}](${item.link}) av ${item.authorName}, ${emailFormatPubDate(item.pubDate)}`;

}).join("\n");

return `${freshMarkdown}## ${restHeader}\n\n${restItemsMarkdown}`;

}

function emailFormatItemsAsMarkdown(articlesItems, blogItems) {

const articlesMarkdown = emailFormatSectionAsMarkdown(articlesItems, configGet("email_latest_articles_header"), configGet("email_earlier_articles_header"));

const blogMarkdown = emailFormatSectionAsMarkdown(blogItems, configGet("email_latest_blog_header"), configGet("email_earlier_blog_header"));

const markdown = articlesMarkdown + "\n\n" + blogMarkdown;

return markdown;

}Apart from Markdown, no code has been related to Buttondown so far, but that’s about to change. We need to post the Markdown to the Buttondown API!

I haven’t created a testing newsletter for this. I probably should, but I worry about the complexity that would add. For now I am using the Buttondown draft feature for testing. In the BabyDriver configuration I can easily switch to draft mode and test most of the send script end-to-end. The part I cannot test is the sending of “real” emails (duh). The Buttondown API documentation is a bit dense, so this took me quite a while to get right (“right” as in “the correct behavior”, I’m still pretty sure the code could be factored better). Without the speedy support from Buttondown I wouldn’t have launched the newsletter as quickly as I did. The stellar support level is another thing making Buttondown almost incomparably better than Mailchimp. Anyway, among the things I needed to figure out was that

For the non-draft emails only one API call is needed, and for draft emails it takes two API calls. All to the same endpoint:

https://api.buttondown.email/v1/emails.The non-draft API call should have no

status.The first draft API call should have a

statusofdraft, and otherwise be the same as that for real emails. I.e. the email payload should be included here.The second draft API call should have no

status, and the payload should be therecipientsof the draft email.

Here goes (if you see how this could better express the intentions I just described, please don’t hesitate to let me know. Perhaps by tagging me in a tweet/post on X, including a link to this article and your refactoring tips?)

function emailPostMarkdown({ markdown, description, now, secondaryId, isDraft, apiToken }) {

const payload = {

"body": markdown,

"subject": `${configGet('email_subject')} ${emailFormatPubDate(now)}`,

"description": description,

"email_type": "public",

"secondary_id": secondaryId,

};

const options = {

"method": "post",

"headers": {

"Authorization": "Token " + apiToken,

},

"contentType": "application/json"

};

if (isDraft) {

const draftPayload = { ...payload, "status": "draft" };

const draftOptions = {

...options,

"payload": JSON.stringify(draftPayload)

};

const draftResponseInstance = UrlFetchApp.fetch(`${EMAIL_CONFIG.BASE_URL}/v1/emails`, draftOptions);

const draftResponseText = draftResponseInstance.getContentText();

console.log('draftResponseText:', draftResponseText);

const draftResponse = JSON.parse(draftResponseText)

console.log('draftResponse:', draftResponse);

const draftId = draftResponse.id;

console.log('draftId:', draftId);

if (!draftId) {

throw new Error(`Something went wrong creating the email. Aborting.\n ${draftResponseText}`);

}

const sendPayload = { 'recipients': configGet('email_draft_subscribers') };

const sendOptions = { ...options, "payload": JSON.stringify(sendPayload) };

const sendResponse = UrlFetchApp.fetch(`${EMAIL_CONFIG.BASE_URL}/v1/emails/${draftId}/send-draft`, sendOptions);

console.log('sendResponse: ', sendResponse.getContentText());

return [draftResponseInstance, sendResponse];

} else {

const sendPayload = { ...payload }; // No special payload here for now

const sendOptions = { ...options, "payload": JSON.stringify(sendPayload) };

const sendRequest = UrlFetchApp.getRequest(`${EMAIL_CONFIG.BASE_URL}/v1/emails`, sendOptions);

console.log(sendRequest);

const sendResponseInstance = UrlFetchApp.fetch(`${EMAIL_CONFIG.BASE_URL}/v1/emails`, sendOptions);

console.log('sendResponse: ', sendResponseInstance.getContentText());

return [sendResponseInstance, null];

}

}We’re almost ready to send the emails. But first, let’s have a look

at the arguments to emailPostMarkdown. There are two things

here that we haven’t seen code for yet: the secondaryId,

and the apiToken.

Buttondown uses the secondary_id to create the titles of

links to the emails. It should be an integer, and what makes the most

sense is a counter starting from 1. I use a sheet in the

document for a log of sent emails (sheets make wonderful little

databases!). Here are the functions used to append and read from this

log:

function appendToLog(logSheetName, { id, secondary_id, title, sent }) {

const logSheet = SpreadsheetApp.getActiveSpreadsheet().getSheetByName(logSheetName);

logSheet.appendRow([id, secondary_id, title, sent]);

}

function getLastLogEntry(logSheetName) {

const logSheet = SpreadsheetApp.getActiveSpreadsheet().getSheetByName(logSheetName);

const lastRow = logSheet.getLastRow();

if (lastRow <= 1) return null; // Assuming the first row is a header

const lastEntry = logSheet.getRange(`A${lastRow}:D${lastRow}`).getValues()[0];

return {

id: lastEntry[0],

secondary_id: lastEntry[1],

title: lastEntry[2],

sent: lastEntry[3]

};

}For the apiToken, things get a bit tricky. I don’t want my friends

using the script to have to keep the token and paste it every time it is

their turn to send the newsletter. Apps Scripts can’t access

localStorage. It does have some other persistence options

though, and amongst them something similar to, but even better than,

localStorage: Document Properties. As its

name implies, properties stored here are tied to the document. Meaning

that I can set it on the document, and when my friends run the send

script it will read the properties, so they don’t even need to bother

with it once. I still need to trust them with the keys, of course, even

if they probably will never see them. But these friends I would trust

with my cut-out heart, so that is fine! I only need to make sure access

to the script is restricted to this tight group of trustworthy friends.

I guess that for some organizations, they need to turn to Google Cloud

secrets storage or something equally tight.

Anyhoo, here are the functions, in the interest of full source code disclosure:

function configSetToken(key) {

const scriptProperties = PropertiesService.getDocumentProperties();

const response = SpreadsheetApp.getUi().prompt('Token');

if (response.getSelectedButton() === SpreadsheetApp.getUi().Button.OK) {

scriptProperties.setProperty(key, response.getResponseText());

}

}

function configGetToken(key) {

var scriptProperties = PropertiesService.getDocumentProperties();

var token = scriptProperties.getProperty(key);

return token;

}

function emailGetToken() {

return configGetToken('EMAIL_TOKEN');

}

function emailSetToken() {

configSetToken('EMAIL_TOKEN');

}When running the emailSetToken function (only I will be

doing that, remember?) I get presented with an input prompt in the tab

with the Sheets document. I paste the keys there and they will get

stored somewhere on Google servers for safe retrieval of whoever runs

the script in the same document instance. Have I mentioned that Apps

Scripts are executed on the server, btw? This isn’t immediately obvious

when writing and using the scripts. It really “feels” like it happens

client side.

Now, finally, we are ready to actually send the emails! I think we have addressed all concerns for this function already:

function emailSendLatestItems() {

const lastLogEntry = getLastLogEntry(configGet('email_log_sheet'));

const newSecondaryId = lastLogEntry ? parseInt(lastLogEntry.secondary_id, 10) + 1 : 1;

const [articlesRss, blogRss] = emailFetchFeeds(configGet('email_article_rss_url'), configGet('email_blog_rss_url'));

const [articlesItems, blogItems] = emailItemsFromRss(new Date().getTime(), articlesRss, blogRss);

const markdown = emailFormatItemsAsMarkdown(articlesItems, blogItems);

const [responseInstance] = emailPostMarkdown({

markdown,

description: `${configGet('email_description')} -- ${articlesItems.freshItems[0].description} -- ${articlesItems.freshItems[1].description}`,

now: Date.now(),

isDraft: configGet('email_is_draft'),

apiToken: emailGetToken(),

secondaryId: newSecondaryId

});

const responseText = responseInstance.getContentText() !== '' ? responseInstance.getContentText() : '{}';

const response = JSON.parse(responseText);

if (response['code']) {

console.error(`Something went wrong:\n ${response}`)

return;

}

appendToLog(configGet('email_log_sheet'), {

id: response.id,

secondary_id: newSecondaryId,

title: response.subject,

sent: new Date().toISOString()

});

if (configGet('email_is_draft')) {

console.info(`Email draft sent. Recipients: ${configGet('email_draft_subscribers')}.`);

return;

}

console.info(`Email sent. API response:\n${response}`);

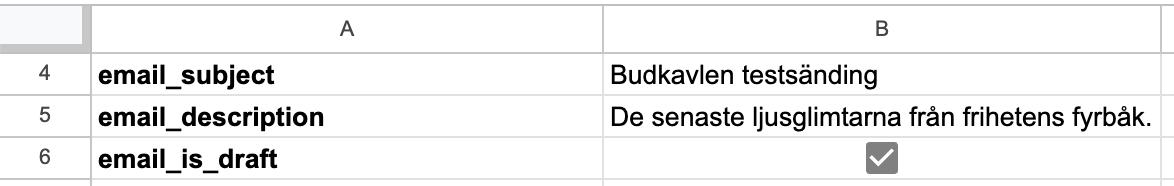

}To use this code out-of-the-box, you’ll need to create a document with two sheets:

BabyDriver Config (you can name it whatever you want, as long as you update the

configGetfunction) this sheet should have the following named ranges:email_subject: Used for the subject of the email. It will be followed by the publishing date

email_description: Will be used as metadata description of the email’s web page when it is shared on social media

email_is_draft: A boolean determining if the email should be sent as a draft or not (as discussed above)

email_draft_subscribers: A multi-column range with email addresses that should receive draft emails

email_log_sheet: The name of the sheet that should be used for the Send log (see below)

email_article_rss_url: The link to the RSS for the Article section of the email. (This is probably the part you will want to customize first, possibly you have only one section, or more than two.)

email_blog_rss_url: Ditto for the Blog section

email_latest_articles_header: The sub header for the fresh part of the Articles section of the email. (Something like “Latest articles”)

email_latest_blog_header: Ditto, Blog section

email_latest_articles_days: The max age in days for an Article to be considered fresh

email_latest_blog_days: Ditto, Blog section

email_earlier_articles_header: The sub header for the rest part of the Articles section

email_earlier_blog_header: Ditto, Blog section

email_earlier_articles_count: The number of articles to be listed in the rest part of the Articles section

email_earlier_blog_count: Ditto, Blog section

Send log, a sheet named the same as whatever you configured above. The first row in the sheet should have the headers:

id,secondary_id,title, andsent

Phew! That’s a wrap! But it is also all source code of a

fully automated, and customized, RSS based newsletter. Well, minus

a lot of _test_ functions. I might put the full script in a

public repository. Please tell me to do that.

Another thing about the code: Apps Scripts lets you split the code

over several files, but these are not modules, or namespaces or

anything. So that’s why all functions are prefixed with

email here, because there are also publishing

functions.

Mentioning other functions… actually there are also functions for the backing up of subscriber information. My experience with Mailchimp deleting my subscribers database has left deep scars in me, so there are functions for downloading the subscriber email addresses from Buttondown, storing them in a sheet in the same document. It works for our small subscriber list, and probably a bit beyond that. I’ll include this script in that public repository that you are encouraged to nag me about to create.

Do you think I am cheating, calling this “a fully automated, RSS based, newsletter” when obviously we are to send the newsletter out manually every week? If you are a programmer you probably get the shivers thinking about manual steps like that, and all the ways this will break. This story is almost ending badly, you say?

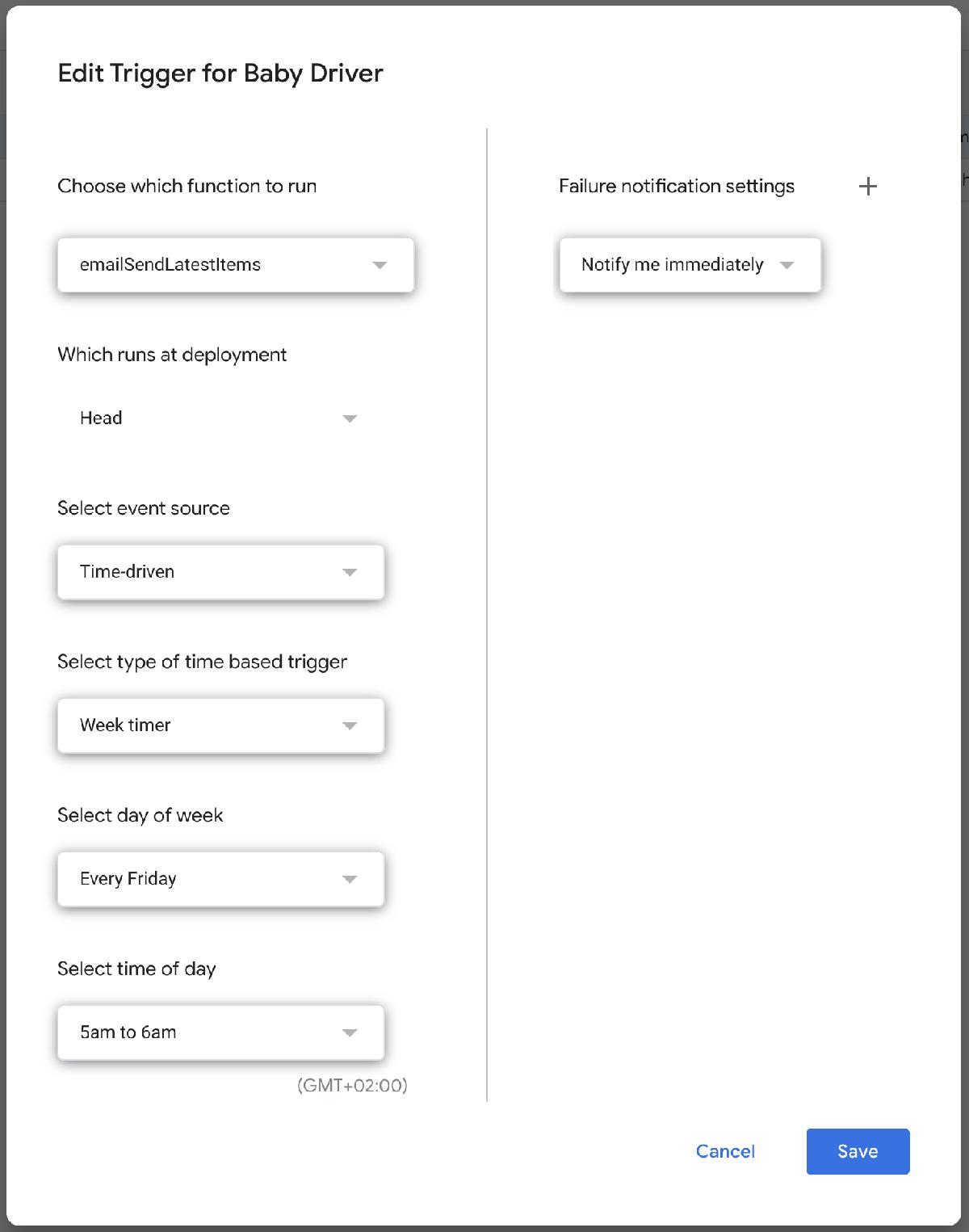

Hah! This is the plot twist I promised above. What I didn’t know setting out to write this script was that Google Apps Script actually supports running any script function through some different types of triggers. Here’s the trigger configuration for this function:

In addition to Time-driven, triggers can be events in the document and also things happening in a Google Calendar.

The subscriber information backup function is also set to happen on a trigger, so that is a fully automated nightly backup. No story should ever end badly! Except if you use Mailchimp. Those stories can end in tears, unfortunately.